High-sensitivity silicon retina for robotics and prosthetics

Conventional image sensors differ fundamentally from biological retinas, because they produce redundant image sequences at a limited frame rate. Understanding the principles by which biological eyes achieve their superior performance and implementing them in artificial retinas are scientific and technical challenges with far-reaching implications in the clinical, neuroscience, and technological fields.

Despite more than 100 years of effort to understand the retina, the function of most of its cells remains unknown. However, recent breakthroughs in genetics, viral trans-synaptic tracing, and two-photon, laser-targeted electrophysiology (see Figure 1) have made it possible to investigate the functions of major classes of brain cells. The retina is an ideal substrate on which to apply these techniques.1

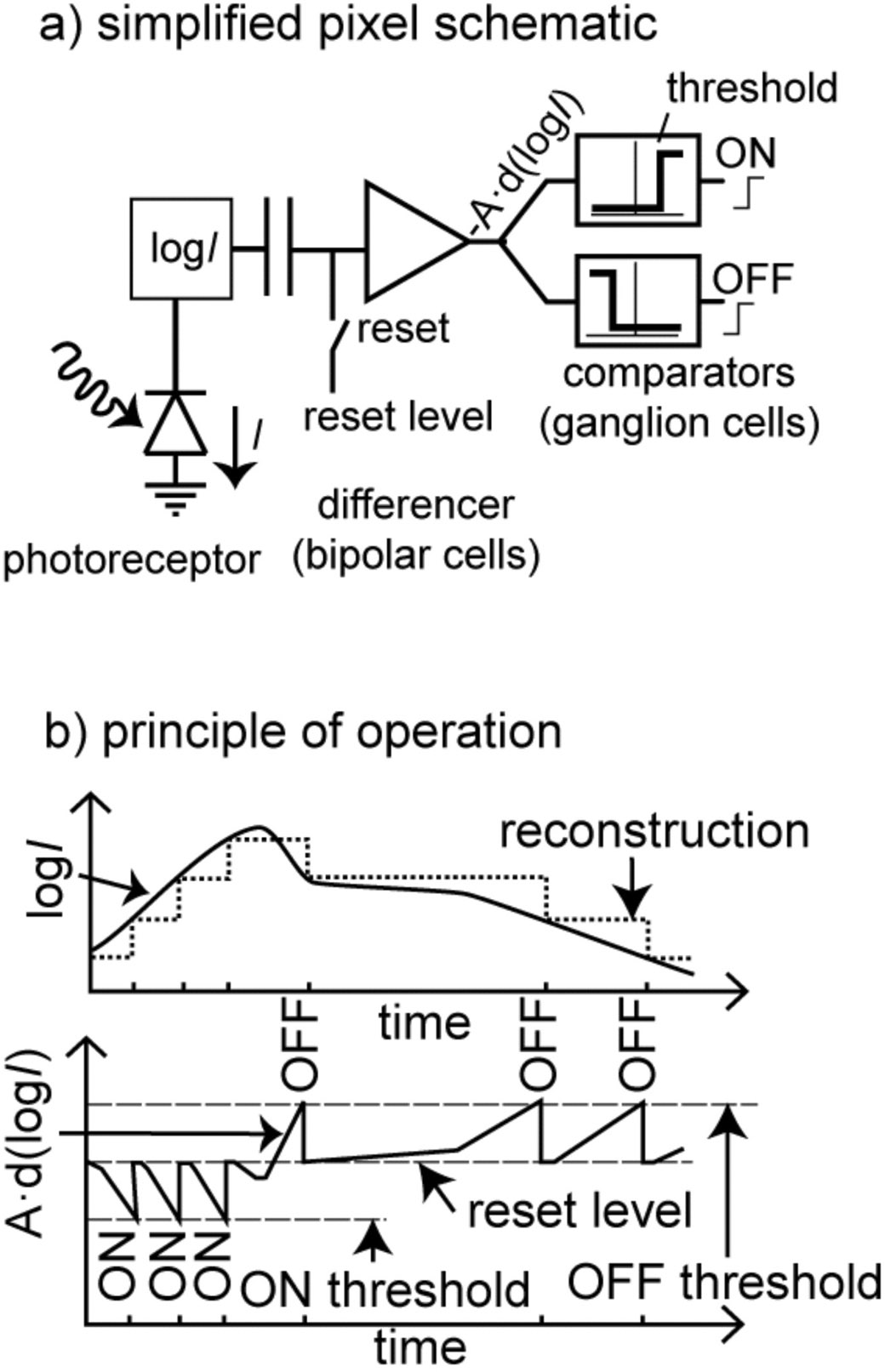

Experimental observations indicate that the visual systems of a wide range of animals, from insects to humans, can be partitioned both anatomically and functionally into pathways specialized for spatial and temporal vision,1 suggesting that this specialization is fundamentally important for perception. The output spikes carried on the mammalian optic nerve are asynchronous, event-based, sparse, and informative. For perception tasks, this representation is vastly superior to the redundant image sequences generated by conventional cameras. Prior chips and systems claim to mimic the vision of different animals, but most of these implementations are based on very simple models: an array of identical pixels, each combining a photoreceptor element with some basic circuitry that, for instance, mimics the local gain control or spatial filtering properties of biological retinas.2, 3 Recent chips have included event-based readouts to mimic the asynchronous spikes on the optic nerve and to reduce the amount of output data or increase the effective frame rate (see Figure 2).4, 5 Other designs focus on very specific image-processing functions claimed to be performed very efficiently by the eyes of living beings.6
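As a rough illustration of what an event-based readout means, the sketch below models a single pixel that emits an ON or OFF event whenever its log intensity has changed by a fixed threshold since the last event. This temporal-contrast scheme is one common approach and is assumed here for illustration only; it is not necessarily the circuit used in the chips cited above. The function name `events_from_samples`, the `threshold` parameter, and the sample values are all hypothetical.

```python
import math


def events_from_samples(samples, threshold=0.25):
    """Convert one pixel's sampled intensity into sparse ON/OFF events.

    samples   -- list of (time, intensity) pairs with intensity > 0
    threshold -- change in log intensity that triggers one event (assumed value)

    Returns a list of (time, polarity) pairs, polarity +1 (ON) or -1 (OFF).
    """
    events = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)  # log intensity at the last event

    for time, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # A large change produces several events at the same time stamp.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((time, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events


if __name__ == "__main__":
    # An unchanging pixel produces no output at all.
    print(events_from_samples([(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]))
    # A pixel that brightens and then dims produces ON events, then OFF events.
    print(events_from_samples([(0.0, 1.0), (1.0, 2.0), (2.0, 0.5)]))
```

The point of the sketch is the data reduction: a static scene generates no events, whereas a frame-based camera would transmit every pixel in every frame.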

The range of applications of these silicon retinas remains limited, because of their low quantum efficiency and their inability to combine high-quality spatial and temporal processing on the same chip. Solutions to these technical challenges would revolutionize artificial vision by providing fast, low-power sensors with biology's superior local gain control and spatiotemporal processing. Such sensors would find immediate and widespread applications in industry, and provide natural vision prostheses for the blind.7, 8

With our SeeBetter program, we aim to design an integrated vision sensor that takes into account the latest research results in retinal functionality and applies them to advanced artificial vision sensors. In order to build such a system, the different types of cells present in the retina need to have a chip counterpart.

Since a biological retina is a 3D structure, the area used for phototransduction does not take away from the area for processing. In conventional (front-side illuminated) image sensors, any area that is used for phototransduction is not available for other circuits. If the fraction available for phototransduction (the fill factor) is small, there is a fundamental limitation on the achievable signal-to-noise ratio. This area tradeoff limits the functionality of conventional cameras and is the reason why conventional image sensors have very simple pixels and limited processing capability. In SeeBetter, we will build a hybrid vision sensor in which the photodetection elements modeling the photoreceptors in the retina are fabricated on a different wafer from the other silicon retinal cells. The two wafers are then interconnected using bump-bonding techniques. This hybridization brings about high quantum efficiency (see Figure 3) and an almost 100% fill factor without taking away from the area available for transduction, amplification, processing, and readout.9 To achieve high efficiency, the electronics can be fabricated in advanced CMOS technology without having to worry about the suitability of the technology for photodetection. This is important because of the complexity of retina cell models and the large number of photodetectors needed to obtain high visual resolution.
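The link between fill factor and signal-to-noise ratio can be made concrete with a small calculation. The sketch below assumes the shot-noise limit, where the SNR is the square root of the number of collected photoelectrons, and ignores read noise and dark current. The function name `shot_noise_snr` and all numeric values are hypothetical and are not taken from the SeeBetter design.

```python
import math


def shot_noise_snr(photon_flux, pixel_area_um2, fill_factor,
                   quantum_efficiency, exposure_s):
    """Shot-noise-limited SNR of one pixel (read noise and dark current ignored).

    photon_flux        -- photons per square micrometer per second
    pixel_area_um2     -- total pixel area in square micrometers
    fill_factor        -- fraction of the pixel area that collects light (0..1)
    quantum_efficiency -- electrons generated per incident photon (0..1)
    exposure_s         -- integration time in seconds
    """
    electrons = (photon_flux * pixel_area_um2 * fill_factor
                 * quantum_efficiency * exposure_s)
    return math.sqrt(electrons)


if __name__ == "__main__":
    # Illustrative numbers only: same pixel, same light, two fill factors.
    common = dict(photon_flux=1000.0, pixel_area_um2=100.0,
                  quantum_efficiency=0.5, exposure_s=0.01)
    low = shot_noise_snr(fill_factor=0.25, **common)
    high = shot_noise_snr(fill_factor=1.0, **common)
    print(f"fill factor 0.25: SNR = {low:.1f}")
    print(f"fill factor 1.00: SNR = {high:.1f}")
    print(f"improvement: x{high / low:.2f}")
```

Under this assumption the SNR scales with the square root of the fill factor, so moving the photodetectors to their own wafer improves the SNR without shrinking the circuitry in each pixel.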

SeeBetter will address these problems through a multidisciplinary collaboration of experts in biology, biophysics, biomedicine, electricity, and semiconductor engineering. By succeeding in this effort, we will lay a foundation for future artificial vision systems grounded in biology's superior capabilities. The resulting sensor will also be an ideal front end for retinal prosthetics, since it attempts to mimic biological retina information processing and provides its output directly in the form of spikes similar to those carried on the eye's optic nerve. Moreover, it will be useful in a wide variety of practical real-time, power-constrained vision applications that have to process scenes spanning temporal scales from microseconds to days and illumination conditions from starlight to sunlight.

Apart from new machine vision and retinal prosthetics applications, future developments of this work will also use hybridization technology to combine different types of photodetector layers (such as IR, UV, and single-photon detectors) with the CMOS ‘retina’ electronics layer.

Konstantin Nikolic is the Corrigan Research Fellow in the Dept. of Electrical and Electronic Engineering. He was an associate professor at the University of Belgrade and a senior research fellow at University College London. His interests include mathematical biology, computational neuroscience, and bio-inspired technologies.

David San Segundo Bello designs electronic systems and integrated circuits for image sensors and biomedical applications. From 2004 to 2008 he worked in the mixed-signal department in the wireline group at Infineon Technologies (currently Lantiq).

Tobi Delbruck is a physics professor at the Swiss Federal Institute of Technology (ETH) Zurich and is employed through the University of Zurich. His recent interests are in spike-based silicon retina and vision sensor design, event-based digital vision, and micropower visual and auditory sensors.

Shih-Chii Liu is group leader at the Institute of Neuroinformatics, which is part of both ETH Zurich and the University of Zurich. Her recent work focuses on spike-based silicon cochlear sensor design, event-based algorithms, and hardware neural architectures.

Botond Roska, group leader, was a Harvard Society Fellow at Harvard University from 2002 to 2005. His recent work focuses on the development and use of novel molecular and viral tools for neural circuits and optogenetic tools for restoring vision in retinal degeneration.