Doctor in your pocket: Here come smartphone diagnostics

If you’re lucky, a visit to your doctor consists of little more than a chat and a brief visual check of the ailment you had been worried about. More involved appointments will require the doctor to wheel out some (often large) medical device to make a diagnosis. But it may become more common for a doctor to whip out their phone to get to the bottom of your complaint.

“I use a free earthquake app for people who I think have Parkinson’s disease,” says Frederick Carrick, who maintains an international neurology practice, and holds senior research positions at the Bedfordshire Centre for Mental Health Research in association with the University of Cambridge in the UK, and, in the US, at the University of Central Florida College of Medicine. “Asking the patient to hold the iPhone, I can ‘see’ [on the app] a very low amplitude, say four-hertz, Parkinson’s tremor of the hand before it becomes visual.” He continues: “Actually, we jerry-rig a lot of the smartphone applications that are available for other purposes and put them into medical utilization.”
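What an app like this does with the phone’s accelerometer can be sketched in a few lines. The Python below is a minimal illustration, not the code of any particular earthquake app: it takes a synthetic acceleration trace and picks out its strongest frequency with a Fourier transform. The function name `dominant_frequency`, the 100 Hz sample rate, the 3 to 7 Hz search band, and the signal itself are all assumptions made for the example.

```python
import numpy as np

def dominant_frequency(samples, sample_rate_hz, band=(3.0, 7.0)):
    """Return the strongest frequency (Hz) inside `band` of a 1-D acceleration trace."""
    samples = np.asarray(samples, dtype=float)
    samples = samples - samples.mean()        # remove the constant (gravity) offset
    window = np.hanning(len(samples))         # taper the ends to limit spectral leakage
    spectrum = np.abs(np.fft.rfft(samples * window))
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate_hz)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[in_band][np.argmax(spectrum[in_band])]

# Synthetic stand-in for a hand-held phone: a faint 4 Hz oscillation buried in noise.
rng = np.random.default_rng(0)
sample_rate_hz = 100.0                        # assumed accelerometer sample rate
t = np.arange(0, 10, 1 / sample_rate_hz)      # ten seconds of data
trace = 0.02 * np.sin(2 * np.pi * 4.0 * t) + 0.01 * rng.standard_normal(t.size)

tremor_hz = dominant_frequency(trace, sample_rate_hz)
print(f"dominant frequency: {tremor_hz:.1f} Hz")
```

A longer recording gives finer frequency resolution; ten seconds at this sample rate resolves steps of 0.1 Hz.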

Carrick is part of a growing community of medical specialists, academic researchers, and entrepreneurs who are finding hacks, developing and testing apps, and engineering smartphone add-ons that will provide high-quality health data on par with often bulky and invariably expensive traditional medical devices. The vision of this community is simple: to shift healthcare away from hospitals and place new tools for personalized diagnosis, health monitoring, and treatment guidance into the hands of clinicians and patients at the point of care. The device that could make this vision a reality, one with high-resolution cameras, connectivity, a powerful processor, and a wide array of sensors, is accessible to some 80 percent of the population: the smartphone.

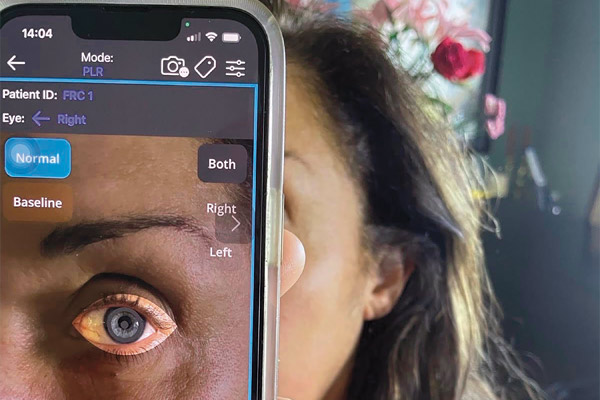

In 2021, Carrick led an international team of clinicians and health and technology researchers in an independent assessment of the smartphone app Reflex to gauge its usefulness in evaluating the recovery of patients with concussions. This is typically done by measuring their pupillary light reflex—the eyes’ response to light. The team wanted to see if the app could measure, with the same level of accuracy, the patients’ pupillary light response that had been recorded previously with a traditional pupillometer.

With the Reflex app open, the examiner held the smartphone camera up to one eye and tapped the screen, after which the camera’s light flashed and the results were recorded.

“We plotted Bland–Altman curves comparing the accuracy of one with the other and they were the same, which is phenomenal,” says Carrick. “The only difference is that you can’t use those traditional machines on a football field or in an emergency situation because they’re very sensitive to changes in ambient lighting—but we can do this using the app with the smartphone flash as a baseline.”
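For readers unfamiliar with the method, a Bland–Altman analysis compares two measurement methods by looking at the differences between paired readings rather than at their correlation. The sketch below computes the mean difference (the bias) and the conventional 95 percent limits of agreement. The function name and the readings are invented for illustration and are not data from the study.

```python
import numpy as np

def bland_altman(method_a, method_b):
    """Return per-pair means and differences, the bias, and 95% limits of agreement."""
    a = np.asarray(method_a, dtype=float)
    b = np.asarray(method_b, dtype=float)
    diff = a - b                      # disagreement for each paired reading
    mean = (a + b) / 2.0              # x-axis of the Bland-Altman plot
    bias = diff.mean()                # systematic offset between the two methods
    sd = diff.std(ddof=1)             # sample standard deviation of the differences
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)
    return mean, diff, bias, limits

# Made-up paired readings (arbitrary units), one pair per patient.
app_readings = [4.1, 3.8, 5.0, 4.6, 3.9, 4.4]
pupillometer_readings = [4.0, 3.9, 4.9, 4.7, 3.8, 4.5]

mean, diff, bias, limits = bland_altman(app_readings, pupillometer_readings)
print(f"bias = {bias:.3f}, limits of agreement = ({limits[0]:.3f}, {limits[1]:.3f})")
```

Two methods are usually judged interchangeable when the bias is close to zero and the limits of agreement are narrower than the difference that would matter clinically.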

One of the first people to recognize the potential of harnessing mobile phones for health applications was SPIE Fellow Aydogan Ozcan, Chancellor’s Professor and head of the Bio- and Nano-Photonics Laboratory at the University of California, Los Angeles. In the mid-2000s, “back when smartphones were not smart,” Ozcan observed that a lot of global health problems—such as HIV, tuberculosis, and malaria—were rife in settings with poor access to technology. And yet most of the diagnostic solutions being developed at the time were expensive, delicate, high-tech optical microscopes unlikely to find their way to the places they were needed most.

“I was thinking ‘Why don’t we democratize microscopy imaging?’ That’s how it all started,” Ozcan recalls. “We hacked cell phones to reach the sensors and used their inexpensive CMOS imagers as the basis of computational microscopy, with the main mission of bringing advanced measurements at the microscale and nanoscale in a democratized way to extremely resource-limited settings.”

Proof-of-concept smartphone DNA analyzers. Photo credit: Ozcan lab/UCLA

By 2007, Ozcan and colleagues had cobbled together a working device. “LUCAS” (lens-less ultra-wide-field cell monitoring array platform based on shadow imaging) recorded the shadows of cells in a sample of liquid on its sensor plane, which were then sent through the microscope’s attached mobile phone to a computer, where custom algorithms identified and classified the cells.

As mobile phone technology evolved into the early versions of today’s smartphones, so, too, did Ozcan’s creations. “We were riding a wave of smartphones getting better, with improved optics helping us go from barely seeing red blood cells, which are about seven microns, to seeing bacteria, viruses, and single-molecule DNA at the nanoscale in a matter of a couple of years,” he says.

At the heart of all of Ozcan’s achievements in mobile-phone-based microscopy are compact, inexpensive, and abundant CMOS image sensors. With these, his team developed holographic lens-free microscopy. The technique removes all bulky and expensive optical components and instead places a specimen directly on top of a CMOS image sensor. The specimen is illuminated by a nearby partially coherent light source, which creates an interference pattern captured by the sensor. The resulting data is fed into custom algorithms to create images. By the mid-2010s, Ozcan’s group had refined this method, and developed more sophisticated algorithms and super-resolution techniques to the point that some results were far beyond what he could have imagined when he started down this path.
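The reconstruction step can be illustrated with the angular spectrum method, a standard textbook way to propagate a light field numerically. This is a simplified sketch and not the algorithms of Ozcan’s group: the wavelength, pixel size, sample-to-sensor distance, and the disc standing in for a cell are all assumed values.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_size, distance):
    """Propagate a complex 2-D field by `distance` metres (negative = back-propagate)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_size)              # spatial frequencies, cycles/m
    fy = np.fft.fftfreq(ny, d=pixel_size)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0                              # evanescent components are dropped
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Assumed, illustrative parameters.
wavelength = 0.53e-6      # green illumination, metres
pixel_size = 1.12e-6      # sensor pixel pitch, metres
z = 300e-6                # sample-to-sensor distance, metres
n = 128

# Toy specimen: a partially absorbing disc on a transparent background.
yy, xx = np.mgrid[:n, :n]
specimen = np.ones((n, n), dtype=complex)
specimen[(xx - n // 2) ** 2 + (yy - n // 2) ** 2 < 5 ** 2] = 0.3

at_sensor = angular_spectrum_propagate(specimen, wavelength, pixel_size, z)
hologram = np.abs(at_sensor) ** 2                      # the sensor records intensity only

# Refocus by back-propagating the measured amplitude to the specimen plane.
reconstruction = angular_spectrum_propagate(np.sqrt(hologram), wavelength, pixel_size, -z)

# Sanity check: with the full complex field, back-propagation undoes propagation.
round_trip = angular_spectrum_propagate(at_sensor, wavelength, pixel_size, -z)
```

Because the sensor records only intensity, the phase of the light is lost, and the simple back-propagation above leaves a "twin image" artifact in `reconstruction`. Removing it takes extra steps such as iterative phase retrieval.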

For example, in 2017, his group and international collaborators published results from a proof-of-concept study wherein they had built a handheld smartphone microscope that enables in-situ mutation analysis and targeted DNA sequencing. The technology consists of a 3D-printed optomechanical attachment for the phone containing two laser diodes for fluorescence imaging and an LED for bright-field transmission imaging. In operation, a tissue sample is treated with chemicals that tag DNA bases with fluorescent markers. When the sample is placed in the device, it records multimode images and analyzes them to read the DNA sequence or to identify genetic mutations.

With the device’s potential to be manufactured at less than one-tenth the price of clinical multimode microscopes, Ozcan sees future clinicians in resource-poor settings using it to identify the genetic make-up of cancerous tumors, offer optimal and personalized treatments for diseases, or even quickly identify effective treatments during disease outbreaks.

Today, Ozcan is riding a second wave of technology: artificial intelligence (AI). “In one line of research, we have shown that aberrations and resolution limitations of smartphone-based microscopes due to the poor optics of smartphone cameras could be eliminated with deep neural networks,” he says. The team imaged thousands of tissue samples with their smartphone-based microscopes and then used a diffraction-limited traditional microscope as ground truth. With this, they could apply a neural net that transformed relatively poor-quality smartphone images into super-resolution ones.

Next, they tested the technique for detecting sickle cell disease, an inherited blood disorder and leading cause of child mortality in sub-Saharan Africa and elsewhere. “We took thin smears of blood from hundreds of patient samples, enhanced their microscopy images with a neural network, and then with a successive neural network identified and counted sickle cells,” Ozcan explains. “It gave us medical-grade results matching what diagnosticians would achieve with a diffraction-limited pathology microscope.”

Another trailblazer focusing on combining the power of AI with smartphones to democratize healthcare is Shwetak Patel, director of Health Technologies at Google, and a computer science and engineering professor at the University of Washington. At the 2023 Lindau Nobel Laureate Meeting in Germany, Patel introduced some of the applications he and his team have been working on.

“The camera alone is very powerful,” he told the Lindau audience. “Just using [a smartphone’s] front-facing camera and looking at the screen, you can get passive heart rate data, which is actually as good as contact data.” Patel explained further that associated computer-vision algorithms and AI are now skin tone agnostic, and still work when a user is moving their head about, so that heart-rate data can be extracted from any person continuously to investigate, for example, the blood flow between different parts of the face.

In more recent work, Patel explained, an AI-souped-up smartphone camera is combined with other native smartphone capabilities to delve deeper into heart health. “We use the microphone and the speaker and hold the phone to your chest to hear the sound of the heart—essentially, the valves opening and closing. But then, with your finger over the camera, or maybe the camera pointing at your face, you get the heart-rate signal as the blood flow goes from the heart to the face or to the finger, and you can bring those datasets together to do a cardiovascular assessment.”

A different line of research for Patel’s team combined the broadband white-light source of the camera flash with the smartphone’s main camera to form a visible-light spectrometer. By placing a user’s finger over the flash, the technique allowed the researchers to estimate the percentage of hemoglobin versus plasma in the blood—with results comparable to a medical-grade hemoglobin device. Next, they developed an app around the technology and pushed it to all the community health workers in Peru, where, Patel said, “half the kids under the age of three are anemic. Rather than taking a few months, maybe a year, to screen all the kids, with our approach you can do that in possibly three weeks.”

These examples represent just a small fraction of the smartphone healthcare capabilities being unlocked by researchers and innovators around the world. Yet smartphone health monitoring and diagnosis remains a relatively obscure area. Why?

Brady Hunt, a clinical assistant professor of medicine at Dartmouth College, has explored the utility of smartphones for healthcare applications in great detail, publishing a 2021 critical review on the topic with collaborators in the Journal of Biomedical Optics. From his analysis, he notes that “there’s not a widely used smartphone-based imaging system in healthcare today.”

Many smartphone apps can be jerry-rigged for medical applications, for example, the Reflex app that can evaluate the recovery of patients with concussion in lieu of a traditional pupillometer. Photo credit: Frederick Carrick

Hunt believes that’s partly because some researchers focus first on prototyping novel medical imaging techniques in a smartphone before really thinking about whether it is the best vehicle for delivering that capability.

“The way that data flows in a smartphone is not like a medical device, in terms of the way it’s manufactured, access to the sensors, and the precision with which they’re calibrated,” says Hunt. “And researchers need to justify the design of their device such that it’s appealing for clinicians, and not developed in a total vacuum relative to the clinical workflow of the people who might use it someday.”

In his review, Hunt formulated six guidelines to evaluate the appropriateness of smartphone utilization: clinical context, completeness, compactness, connectivity, cost, and claims. He says these guidelines can be boiled down to one simple question: “‘Should you be using a smartphone or a Raspberry Pi in this situation, and why?’ The better you can answer that question, I think the stronger your smartphone-based system will actually be.”

Patel sees other impediments to large-scale adoption. “In the course of the last decade, I think there’s been a lot more bullishness from the clinical community to try these apps and approaches, but there’s still some pushback in terms of different metrics and how we think about consistency evaluation, which I think are very valid criticisms,” he says. “But I think the big one is that we haven’t brought it all together into a cohesive experience yet—it’s still very fragmented.” In Patel’s opinion, like the Star Trek medical tricorder, a single smartphone app delivering personalized diagnosis, health monitoring, and treatment guidance across a range of conditions would catapult smartphone-based healthcare into the mainstream.

Despite these barriers and current limitations, Hunt, Patel, and Ozcan are optimistic about how smartphone-based systems will impact future healthcare. Hunt hopes that they will be able to marry low-cost sensors with appropriately developed AI systems that can help a medical general practitioner do more specialized tests in a healthcare setting.

Ozcan is most excited by the potential of future smartphones having a more cohesive design of front-end optics in line with back-end AI. “If we encode the information for AI to analyze—instead of encoding it for humans to look at it—that’s going to open up new designs that are simpler and yet more powerful to look at more sample volume, with higher resolution, with larger depth of field, and more specificity,” he says.

Meanwhile, Patel envisions new photonics approaches being the linchpin of future smartphone-based health applications. “With smartphone cameras today, we can look at things on the dermis level, but there’s some really cool things you can do with different wavelengths of light and different spectroscopy approaches that can take us several notches deeper,” he says. “Thinking about different levels of body penetration using clever photonics approaches would be a next big step for smartphone technology in the healthcare space.”

Benjamin Skuse is a science and technology writer with a passion for physics and mathematics whose work has appeared in major popular science outlets.