Inventing the future

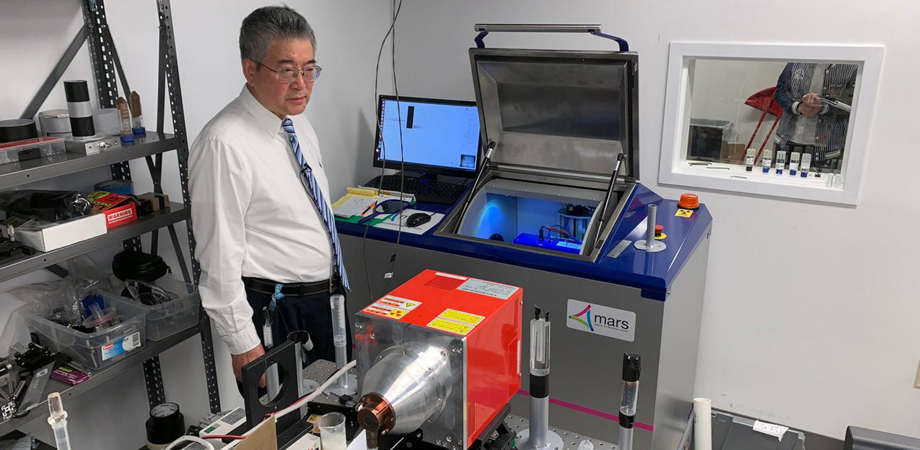

Ge Wang is the Clark and Crossan Endowed Chair Professor and Director of the Biomedical Imaging Center at the Rensselaer Polytechnic Institute. At the AI-based X-ray Imaging System (AXIS) lab, Wang and his team focus on innovation and translation of x-ray computed tomography, optical molecular tomography, multi-scale and multi-modality imaging, and AI/machine learning for image reconstruction and analysis.

Wang was a plenary speaker at SPIE Optics + Photonics, where he discussed x-ray imaging and challenges in the "Wild West of AI."

Can you explain what AI can provide to the medical-imaging community and patients?

Medical imaging involves huge amounts of complex data. AI has great potential to extract information from such big data in unprecedented ways. AI-empowered data processing, image reconstruction, and image analysis can make healthcare more precise and more intelligent. AI-empowered robots are also medically relevant. All of these will benefit patients.

Are there features in x-ray imaging that lend themselves particularly well to deep learning, or can the tools and processes developed be used with other imaging modalities?

X-ray imaging, especially computed tomography (CT), is one of the most widely used imaging modalities for medical diagnosis and intervention. Research and development in x-ray imaging pursue higher spatial resolution, lower image noise, better contrast resolution, richer spectral information, fewer image artifacts, faster imaging speed, and so on. Achieving these targets from incomplete, inconsistent, and imperfect data remains a great challenge. In this context, deep learning can bridge the gap between raw CT data and the desired imaging performance. Other imaging modalities can benefit from AI methods as well.

What are the current constraints in using AI for medical imaging?

AI-based imaging is an emerging field. It is well known that deep neural networks are vulnerable to adversarial attacks, may generalize poorly to new datasets with a different distribution (say, data collected on a different imaging device), and cannot be thoroughly characterized in terms of their learning mechanisms and solution properties. In addition, the lack of well-labeled big data and limited computing power remain constraints in the medical imaging domain, especially when we solve truly 3D or even higher-dimensional imaging problems. These challenges represent great opportunities for basic research and clinical translation.

What new developments or advances at your AXIS lab are you most excited about?

We are closely collaborating with General Electric, Massachusetts General Hospital, Memorial Sloan Kettering Cancer Center, Cornell University, Wake Forest University, Cedars-Sinai Medical Center, and other institutions to address various aspects of deep tomographic imaging. We are excited by all these projects and grateful for funding from NIH and industry. One recent result is our Analytic, Compressive, Iterative Deep (ACID) network, developed to stabilize tomographic imaging, which integrates different algorithmic ingredients for superior image quality. As another example, a deep network is usually considered a black box, and research is in progress to make the black box more transparent, turning it "grey" or "white." We are exploring ways to make the box "green," letting it adjust its parameters in a biomimetic fashion.

This is a great quote from one of your earlier interviews: "X-ray and optical are highly synergistic modalities working together. This is a perfect marriage." Can you elaborate on this with specific examples of your current research?

We are working on an NIH academic-industrial partnership project that combines photon-counting micro-CT and fluorescence lifetime tomography for preclinical studies on a mouse model of breast cancer. From such a micro-CT scan, we can extract material properties and contrast-enhanced pathologies. These serve as prior knowledge for optical molecular tomography, both for optical background compensation and for synergistic analysis of drug delivery. AI imaging methods are being developed for x-ray micro-CT, optical molecular tomography, and the seamless fusion of these two modalities. This example shows that AI can serve as a unified software framework for multimodality imaging.

What originally led to your interest in biomedical imaging?

Since I was a graduate student, I have dedicated my whole career to biomedical imaging. It is a highly interdisciplinary field that invites wide-ranging and even wild imagination, offers many challenges and learning opportunities, and demands amazing collaborations around the globe. Its healthcare relevance gives us deep satisfaction in helping others with magic imaging methods.

When did you realize that AI was a viable and enhancing tool for medical imaging?

My team has been working on machine-learning-based imaging methods over the past decade. For example, we published the first dictionary learning algorithm for low-dose CT reconstruction in IEEE TMI in 2012, through collaboration with Xi'an Jiaotong University in China (Prof. X. Q. Mou and Dr. Q. Xu), Wake Forest University (Prof. H. Y. Yu), and General Electric (Dr. J. Hsieh). Dictionary learning is a precursor of deep learning. Since 2016, we have been focusing on deep-learning-based tomographic imaging, starting right after I attended the AAAS symposium "Technology of Artificial Intelligence" on Feb. 13, 2016. I was deeply inspired by the presentations on AlphaGo and natural language processing (NLP) at that symposium, and I wrote the first perspective on deep tomographic imaging.
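Dictionary learning typically alternates two steps: learning a dictionary of "atoms" from training patches, and sparse coding, which represents each image patch with only a few of those atoms. The sketch below shows only the sparse-coding step, using orthogonal matching pursuit (OMP), a standard greedy solver. It is a generic illustration under stated assumptions (unit-norm atoms, a fixed sparsity level `k`), not the 2012 low-dose CT algorithm; the function name `omp` and its parameters are hypothetical, and the dictionary-update step and the coupling to CT data fidelity are omitted.

```python
import numpy as np

def omp(D, x, k, tol=1e-10):
    """Orthogonal matching pursuit: sparse-code x over the columns of D.

    D: (m, n) dictionary whose columns (atoms) are assumed to have unit L2 norm.
    x: (m,) signal, for example a vectorized image patch.
    k: maximum number of atoms allowed in the representation.
    Returns a length-n coefficient vector with at most k nonzero entries.
    """
    x = np.asarray(x, dtype=float)
    coef = np.zeros(D.shape[1])
    support = []
    residual = x.copy()
    for _ in range(k):
        if np.linalg.norm(residual) < tol:
            break  # x is already represented to within tol
        # Greedy step: pick the atom most correlated with the unexplained part.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break  # no new atom improves the fit
        support.append(j)
        # Orthogonal step: re-fit all selected atoms jointly by least squares.
        sub = D[:, support]
        sol, *_ = np.linalg.lstsq(sub, x, rcond=None)
        residual = x - sub @ sol
        coef[:] = 0.0
        coef[support] = sol
    return coef

# Toy dictionary: four standard-basis atoms plus one normalized diagonal atom.
D = np.hstack([np.eye(4), np.array([[1.0], [1.0], [0.0], [0.0]]) / np.sqrt(2.0)])
print(omp(D, [3.0, 0.0, 2.0, 0.0], k=2))
```

In a patch-based reconstruction of this general kind, an intermediate image would be split into overlapping patches, each patch sparse-coded this way, and the resulting patches averaged back into the image; a small `k` suppresses more noise at the risk of losing fine detail.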

You published the first helical/spiral cone-beam/multi-slice CT algorithm in 1991. Can you explain how this algorithm works? Was there a critical turning point that led to its discovery?

Truly volumetric CT, rather than classic slice-by-slice fan-beam tomography in the "step-and-shoot" mode, revolutionized medical imaging. Volumetric CT scanning in the spiral/helical cone-beam/multi-slice mode uses a powerful x-ray source and a 2D detector array (multiple detector rows) on a slip ring that rotates continuously while the patient table moves through the gantry, so the source traces a helix relative to the patient and generates a continuous stream of cone-beam data. In 1991, I developed the first mathematical analysis and algorithm for spiral cone-beam CT, and subsequently wrote many papers with my collaborators in this area.
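To make the scanning geometry concrete, here is a minimal sketch assuming an idealized helix: the source rotates at radius R around the z axis while the table advances a distance h per rotation, so the source position at gantry angle λ is (R cos λ, R sin λ, hλ/2π). The function names and numbers are hypothetical and purely illustrative; real scanners add calibration and geometric corrections that are ignored here. The pitch helper follows the common convention of table feed per rotation divided by the total beam collimation at isocenter.

```python
import math

def helical_source_positions(radius, feed_per_turn, n_turns, views_per_turn, z0=0.0):
    """Sample source positions on an idealized helical (spiral) trajectory.

    radius: source-to-rotation-axis distance R.
    feed_per_turn: table travel h during one full gantry rotation.
    Returns a list of (x, y, z) tuples, one per projection view.
    """
    positions = []
    for i in range(n_turns * views_per_turn):
        lam = 2.0 * math.pi * i / views_per_turn  # gantry angle in radians
        x = radius * math.cos(lam)
        y = radius * math.sin(lam)
        z = z0 + feed_per_turn * lam / (2.0 * math.pi)  # z advances h per turn
        positions.append((x, y, z))
    return positions

def normalized_pitch(feed_per_turn, collimation_at_isocenter):
    """Table feed per rotation divided by total beam collimation at isocenter."""
    return feed_per_turn / collimation_at_isocenter

# Illustrative values only: R = 570 mm, 40 mm table feed per rotation,
# two rotations sampled at four views each.
views = helical_source_positions(570.0, 40.0, n_turns=2, views_per_turn=4)
print(len(views), views[4])
print(normalized_pitch(40.0, 40.0))
```

A pitch above 1 leaves gaps between successive turns of the helix as seen by the detector, while a pitch below 1 gives overlapping coverage; this trade-off between scan speed and data redundancy is one reason helical reconstruction needs dedicated algorithms.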

Imaging authorities Defrise et al. wrote: "To solve the long-object problem, a first level of improvement with respect to the 2D filtered backprojection algorithms was obtained by backprojecting the data in 3D, along the actual measurement rays. The prototype of this approach is the algorithm of Wang et al." Currently, there are about 80 million medical CT scans annually in the USA (about 200 million worldwide), the majority of them in the spiral cone-beam/multi-row-detector mode.
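The phrase "backprojecting the data in 3D, along the actual measurement rays" can be made concrete with the voxel-to-detector mapping that cone-beam backprojection relies on. The sketch below assumes an idealized geometry: the source lies on the helix (R cos λ, R sin λ, hλ/2π), and a flat detector sits at distance `src_to_det` from the source, perpendicular to the central ray through the rotation axis. For each point it finds where the true diverging ray from the source through that point hits the detector, plus a Feldkamp-style distance weight. It is not Wang's 1991 algorithm: ramp filtering, detector interpolation, and the choice of views per voxel are omitted, and `project_voxel` is a hypothetical helper.

```python
import math

def project_voxel(point, lam, radius, src_to_det, feed_per_turn):
    """Map a 3D point to flat-detector coordinates for gantry angle lam.

    Assumed geometry: source at (R cos lam, R sin lam, h * lam / (2*pi)),
    flat detector at distance src_to_det from the source, perpendicular
    to the central ray through the rotation axis.
    Returns (u, v, weight): in-plane and axial detector coordinates, and
    the FDK-style distance weight (R / t)**2, where t is the point's depth
    along the central ray.
    """
    x, y, z = point
    c, s = math.cos(lam), math.sin(lam)
    z_src = feed_per_turn * lam / (2.0 * math.pi)
    # Depth of the point along the central ray (source toward rotation axis).
    t = radius - (x * c + y * s)
    if t <= 0:
        raise ValueError("point is not in front of the source")
    # Follow the actual diverging ray: magnify offsets by src_to_det / t.
    u = src_to_det * (-x * s + y * c) / t
    v = src_to_det * (z - z_src) / t
    weight = (radius / t) ** 2
    return u, v, weight

# Illustrative values: R = 500 mm, source-to-detector 1000 mm, 10 mm feed per turn.
print(project_voxel((0.0, 50.0, 0.0), 0.0, 500.0, 1000.0, 10.0))
```

In a full backprojection loop, each voxel would accumulate `weight` times the filtered projection value interpolated at (u, v) over the views that see it; because v depends on the source height at each angle, the same voxel lands on different detector rows as the helix advances.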

The problem was posed by Professors P. C. Cheng (SUNY/Buffalo) and D. M. Shinozaki (University of Toronto), who needed to image long, thin material samples with x-rays. This need inspired me to develop various helical/spiral cone-beam CT algorithms. As is often true, a good question leads to a novel answer and may impact diverse areas: in this case, materials science/engineering, clinical/preclinical imaging, and airport security screening. Necessity is the mother of invention; I like Virginia Tech's former tagline "Invent the Future."

See Ge Wang's presentation "X-ray imaging meets deep learning."
