Surveillance gives the big picture

Information on the environment is one of the most critical parts of any tactical operation, whether civilian or military. This doctrine of visual situational awareness demands that field personnel be cognizant of as many of the operational parameters in the environment that may influence the mission outcome as possible. Visual intelligence, for example, can provide data on the location of friendly and enemy forces, location of non-combatants, terrain openings and obstacles, tactics, and other mission intelligence.

Traditionally, situational awareness begins with a pre-mission briefing and is maintained through radio traffic updates from command centers and field operatives; however, this data is gone as soon as it is distributed through the network. To access the data again, the field personnel must request a re-transmission that ties up the command, control, communications, computers, and intelligence (C4I) system with redundant information. Additionally, data accuracy is limited by the ability of the disseminator to describe the situation and is usually relative to the geographic or chronological point of acquisition.

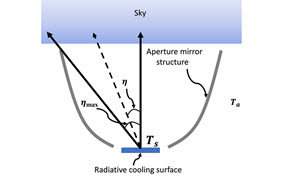

To maximize the effectiveness of visual intelligence, it is necessary to collect and disseminate data with maximum resolution and minimum delay. Sensor networks provide a good method for achieving this goal. One such network is the Integrated Digital Video Surveillance System (IDVS2), a CMOS-based system that can gather and collate digital video data from sensors throughout the tactical environment and automatically distribute that data directly to field personnel (see figure 1). The system provides up-to-the-minute data using video sensors, target overlay features, and data delivered by wireless transmission from pre-surveyed and deployed sensors, airborne micro-unmanned aerial vehicles (UAVs), and field deployable video sensor systems. A tactical data center (TDC) combines the data from these distributed sensors and relays it to the combatants in the field, who receive it on wearable computer systems and view it on head-mounted displays. Under a U.S. Defense Advanced Research Projects Agency (DARPA) sensor development program run by Edward Carapezza, Xybion Electronic Systems (San Diego, CA) has been developing CMOS video detection technology that will make this situational awareness system possible.

CMOS technology has been a major driver of this development by enhancing functionality through on-chip integration while minimizing imager size, power consumption, and weight. Compared to CCD cameras, CMOS cameras may be constructed with significantly less external (non-sensor) hardware because much of the required circuitry can be located directly on the imager substrate. While this approach introduces some disadvantages, including elevated noise generated by on-substrate electronics (see oemagazine, February 2002, page 30), the resulting small size provides significant advantages for civilian and military tactical environments and makes the technology ripe for exploitation.

challenges and advantages

Small size is an absolute requirement for the next generation of micro unmanned air vehicles (MAVs) if they are to be efficiently deployed by soldiers in the tactical environment. An MAV with a CMOS digital video sensor can provide real-time forward reconnaissance without putting troops in harm's way. An integral part of the IDVS2 concept, MAVs contain miniature subsystems for propulsion, guidance and navigation, communication, power, and sensing. Since MAVs are designed to be hand-carried and launched, each of the above components must be as compact, lightweight, and inexpensive as possible to ensure system viability. While it may be possible to command the vehicle to return to base following the mission to keep costs down, it may not always be practical or desirable. A low attrition rate would certainly reduce overall system cost-of-ownership, but from a tactical point of view, an aerial vehicle pointing the way toward its operating base could be disastrous.

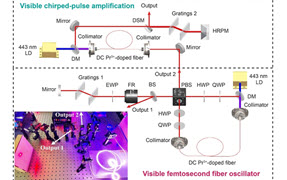

Our R&D teams are refining a variety of technologies suitable for these unmanned sensors, including high-resolution monochrome, multiple-field-of-view (FOV) multiplexed sensors, and tunable multispectral sensors. Each approach is based upon the sensor developed for DARPA.

The 1280 x 1024 pixel CMOS sensor presents both opportunities and challenges, based on sheer volume of data produced. The opportunities are centered on the resolution, digital output, windowing capabilities, and low power requirements of the technology. The challenges are focused on optimization of technical parameters associated with a new technology. CMOS imagers are still unable to achieve modulation transfer functions as good as those of equivalent CCD sensors, for example. They also require snap-shot shutters to remove distortion associated with scene motion that would otherwise be detected by a rolling shutter imager.
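To give a sense of the data volume involved, the short Python sketch below estimates the uncompressed output rate of a 1280 x 1024 imager. Only the pixel count comes from the text above; the 8-bit pixel depth and 30 frames/s rate are illustrative assumptions, not published IDVS2 parameters.

```python
def raw_data_rate_bps(width, height, bits_per_pixel, frames_per_second):
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * frames_per_second


# 1280 x 1024 is the sensor format described in the article.
# 8 bits/pixel and 30 frames/s are assumed values for illustration only.
full_frame_bps = raw_data_rate_bps(1280, 1024, 8, 30)
print(f"Full-frame rate: {full_frame_bps / 1e6:.1f} Mbit/s")  # 314.6 Mbit/s
```

Under these assumptions a single sensor produces several hundred megabits per second before any windowing or compression, which is why bandwidth reduction matters so much for the wireless link.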

Finally, to realize the full resolution of the imager, the wireless data transmission system must be upgraded well beyond the capabilities of the single-channel ground and airborne radio system (SINCGARS). New developments in coded orthogonal frequency division multiplex (COFDM) transmission hold promise for increasing data throughput in a given radio frequency channel bandwidth and for providing immunity to data loss due to multi-path distortion. This data loss is caused by the reflection of radio signals off obstacles in the environment, which causes copies of the signal to arrive at the receive point at different times. With COFDM, the signal cancellation caused by multi-path is coded out and data recovery is robust. The volume of data generated by systems such as IDVS2 will require very high bandwidth wireless systems.
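The multi-path timing problem can be illustrated with simple geometry. The sketch below computes how much later a reflected copy of a signal arrives than the direct copy, and checks it against a guard interval, the idle period that COFDM systems commonly place between symbols so that late echoes do not spill into the next symbol. The path lengths and the 4-microsecond guard interval are hypothetical values chosen for illustration; they are not IDVS2 specifications.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def excess_delay_s(direct_path_m, reflected_path_m):
    """Extra arrival time of the reflected signal relative to the direct one."""
    return (reflected_path_m - direct_path_m) / SPEED_OF_LIGHT_M_PER_S


def within_guard_interval(direct_path_m, reflected_path_m, guard_interval_s):
    """True if the echo arrives before the guard interval has elapsed."""
    return excess_delay_s(direct_path_m, reflected_path_m) <= guard_interval_s


# Hypothetical geometry: 1000 m direct path, 1300 m path via a reflection.
delay = excess_delay_s(1000.0, 1300.0)
print(f"Echo arrives {delay * 1e6:.2f} microseconds late")
print("Absorbed by a 4 us guard interval:",
      within_guard_interval(1000.0, 1300.0, 4e-6))
```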

Using windowing, it is possible to dynamically configure or parse the resolution of the imager during a mission. 'Roam' and 'zoom' are bandwidth reduction features that take advantage of the high pixel count of the imager without requiring the use of all pixels at one time. Roam refers to real-time windowing processes that allow the user to scan the field-of-view with a user-defined subset of pixels that simulate the effect of placing the sensor on a mechanical pan-and-tilt stage. After target acquisition, the user may zoom in to increase sensor resolution by increasing the density of pixels imaging the target, moving from detection to recognition, and ultimately, to identification.
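One way to picture roam and zoom is as two parameters of a single readout operation: where the window starts, and how densely it samples the pixel array. The Python sketch below is a minimal illustration under that assumption. The function name `read_window` and the `stride` parameter are hypothetical, and the frame is a plain list of lists rather than a real sensor interface.

```python
def read_window(frame, top, left, out_rows, out_cols, stride=1):
    """Read out_rows x out_cols pixels starting near (top, left).

    stride > 1 skips pixels, so the same pixel budget covers a wider area
    at lower density; stride = 1 reads every pixel in a smaller area.
    The window origin is clamped so the readout stays on the sensor.
    """
    rows, cols = len(frame), len(frame[0])
    span_rows = (out_rows - 1) * stride + 1
    span_cols = (out_cols - 1) * stride + 1
    if span_rows > rows or span_cols > cols:
        raise ValueError("requested window does not fit on the sensor")
    top = max(0, min(top, rows - span_rows))
    left = max(0, min(left, cols - span_cols))
    return [
        [frame[top + r * stride][left + c * stride] for c in range(out_cols)]
        for r in range(out_rows)
    ]


# Toy 8 x 8 "sensor" whose pixel value encodes its own position.
frame = [[r * 8 + c for c in range(8)] for r in range(8)]

wide = read_window(frame, 0, 0, 4, 4, stride=2)    # whole scene, coarse
roamed = read_window(frame, 0, 4, 4, 4, stride=1)  # pan to the right edge
zoomed = read_window(frame, 2, 2, 4, 4, stride=1)  # full density on target
```

All three readouts return 16 pixels, so the link load is the same in each case; only the placement and density of the samples change.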

The transmission time increases as the number of pixels on target goes up. Future advances in digital video compression may help mitigate this issue. Digital electronic zoom can magnify the image and enhance recognition without increasing resolution or transmission bandwidth. This feature is less expensive and less complicated to implement than optical zoom.
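The trade-off can be sketched in a few lines of Python. The first function estimates how long a window takes to send over a link of a given rate; the second magnifies a received window by pixel replication, which enlarges the picture on the display without adding any new information or any extra transmitted bits. The 1 Mbit/s link rate, the 8-bit pixel depth, and the 320 x 256 window size are assumptions for illustration, and pixel replication is only one simple way to implement digital zoom.

```python
def transmission_time_s(pixel_count, bits_per_pixel, link_rate_bps):
    """Time to send an uncompressed window over a link of the given rate."""
    return pixel_count * bits_per_pixel / link_rate_bps


def digital_zoom(window, factor):
    """Magnify a 2-D window by repeating each pixel factor x factor times."""
    zoomed = []
    for row in window:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        zoomed.extend(list(wide_row) for _ in range(factor))
    return zoomed


# Assumed values: 8-bit pixels over a 1 Mbit/s link.
full_frame_s = transmission_time_s(1280 * 1024, 8, 1_000_000)
window_s = transmission_time_s(320 * 256, 8, 1_000_000)
print(f"Full frame: {full_frame_s:.2f} s, 320 x 256 window: {window_s:.2f} s")

# The magnification happens after reception, so it costs no bandwidth.
magnified = digital_zoom([[1, 2], [3, 4]], 2)
```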

to the next level

Taking advantage of the digital output of the CMOS image sensor, Xybion is developing a multiplexed sensor head design to meet the requirement of multiple fields of view from a common platform (see figure 2). The design incorporates three fixed-FOV imagers to synthesize a super-wide FOV. The wide swath means fewer passes over the target area. When a target of interest is located in the super-wide image, the output of a fourth, narrow-FOV imager can be downlinked to provide positive target identification. Additional applications include a full 360° imager that can be thrown or dropped into a dangerous environment to provide valuable situational awareness. A system such as this is useful for hostage situations or barricaded suspects.

Under a concurrent effort with NASA's Jet Propulsion Laboratory (Pasadena, CA), Xybion is exploring the development of a multispectral imager that combines visible to IR tunable filters with CMOS imagers. Spectral discrimination is a well-proven technology for distinguishing objects of interest from background clutter. Miniature multispectral sensor systems can fly aboard compact UAV platforms to locate mines and minefields, detect camouflage against an organic background, and provide clues to the presence of inorganic material in an organic environment (see figure 3).

sensor fusion

Individually, each of these CMOS imager applications has merit and can increase situational awareness in the tactical environment; however, such data can be best leveraged by integrating the sensors into a network that provides a comprehensive assessment to forces on the ground. Such a system combines detailed imagery from the ground with baseline imagery from overhead assets, such as orbital platforms or high-altitude UAVs, to produce a composite image product. For civilian operations, law enforcement helicopter camera platforms can provide the wide area coverage.

The key to combining independently acquired imagery is spatial registration. Integrating GPS data with the digital video data in the imager bit stream provides the computer system with a reference point for each set of image data. The master computer located at the TDC maintains the baseline video data as a 3-D or 2-D database. The live-action data from unattended ground sensors, MAVs, and other mobile and fixed platforms is fed into the TDC via a high-speed wireless network. The master computer then augments the wide area scene with the real-time detailed video imagery to provide enhanced situational awareness.
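As a rough illustration of GPS-based registration, the Python sketch below converts a sensor's latitude and longitude into a row and column in a 2-D baseline mosaic, then pastes a small tile of live imagery at that position. It assumes a north-up mosaic with a known origin at its north-west corner and a fixed ground resolution, and it uses a flat-earth approximation that is reasonable only over short distances. The function names, the origin, and the scale are all hypothetical; a fielded system would also have to correct for sensor pointing and perspective.

```python
import math

METERS_PER_DEGREE_LAT = 111_320.0  # approximate value, assumed constant


def gps_to_mosaic_pixel(lat, lon, origin_lat, origin_lon, meters_per_pixel):
    """Map a GPS fix to (row, col) in a north-up mosaic.

    The origin is the mosaic's north-west corner, so rows grow southward
    and columns grow eastward.
    """
    north_m = (lat - origin_lat) * METERS_PER_DEGREE_LAT
    east_m = ((lon - origin_lon) * METERS_PER_DEGREE_LAT
              * math.cos(math.radians(origin_lat)))
    row = round(-north_m / meters_per_pixel)
    col = round(east_m / meters_per_pixel)
    return row, col


def paste_tile(mosaic, tile, row, col):
    """Overwrite mosaic pixels with the tile, clipping at the mosaic edges."""
    for r, tile_row in enumerate(tile):
        for c, pixel in enumerate(tile_row):
            rr, cc = row + r, col + c
            if 0 <= rr < len(mosaic) and 0 <= cc < len(mosaic[0]):
                mosaic[rr][cc] = pixel


# Hypothetical 200 x 200 baseline scene at 1 m per pixel.
mosaic = [[0] * 200 for _ in range(200)]
row, col = gps_to_mosaic_pixel(31.999, -117.0, 32.0, -117.0, 1.0)
paste_tile(mosaic, [[255, 255], [255, 255]], row, col)
```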

In order to conserve transmission bandwidth and/or reduce transmission time, each field sensor need only forward difference data after establishing a detailed scene exchange with the master computer. The master scene can be automatically updated on a regular basis to ensure that the sensor data accurately shows the environment it is surveying.
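A minimal sketch of the difference-data idea follows, assuming each frame is a 2-D grid of gray levels and that both ends hold the same reference scene. The sensor sends only the pixels that differ from the reference by more than a threshold, and the master computer applies those changes to its copy. The function names and the threshold are hypothetical; a practical system would more likely work on blocks or compressed residuals than on individual pixels.

```python
def changed_pixels(reference, current, threshold=0):
    """List (row, col, value) for pixels that moved more than threshold."""
    changes = []
    for r, (ref_row, cur_row) in enumerate(zip(reference, current)):
        for c, (ref, cur) in enumerate(zip(ref_row, cur_row)):
            if abs(cur - ref) > threshold:
                changes.append((r, c, cur))
    return changes


def apply_changes(reference, changes):
    """Return a copy of the reference scene with the changes applied."""
    updated = [row[:] for row in reference]
    for r, c, value in changes:
        updated[r][c] = value
    return updated


# Sensor side: compare the new frame against the agreed reference scene.
reference = [[10, 10], [10, 10]]
current = [[10, 12], [10, 40]]
update = changed_pixels(reference, current, threshold=5)

# Master side: only one of four pixels had to cross the link.
master_scene = apply_changes(reference, update)
```

A nonzero threshold keeps sensor noise from being transmitted as change, at the cost of ignoring small real differences, which is one reason the master scene benefits from the periodic full refresh.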

In the field, the user has the ability to select the area of coverage based on his or her current GPS location. For example, a soldier may be interested in real-time situational awareness for a radius of 100 m while advancing on a target. However, during an engagement, 30 m of coverage may be sufficient. At the command post, on the other hand, the entire TDC database is available to commanders for a complete bird's-eye view with the capacity to zoom in and view detailed imagery anywhere in the sensor network coverage area. As the engagement moves toward the objective, new sensors are added to the network, allowing the video mosaic to dynamically develop. These sensors can include fixed ground sensors or sensors introduced by hand launched MAVs, ballistic launches (mortar or small arms), or other aerial vehicles.
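Selecting coverage by radius amounts to a distance filter around the user's GPS position. The Python sketch below applies the standard haversine great-circle formula to decide which sensors fall inside the chosen radius. The sensor names and coordinates are made up for illustration, and nothing here reflects how the IDVS2 software actually indexes its sensors.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS fixes (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def sensors_in_range(user_pos, sensors, radius_m):
    """Names of sensors within radius_m of the user's (lat, lon)."""
    return [
        name for name, (lat, lon) in sensors.items()
        if distance_m(user_pos[0], user_pos[1], lat, lon) <= radius_m
    ]


# Hypothetical sensors roughly 22 m, 78 m, and 222 m north of the user.
user = (32.0, -117.0)
sensors = {
    "ground-1": (32.0002, -117.0),
    "mav-1": (32.0007, -117.0),
    "ground-2": (32.0020, -117.0),
}
advancing = sensors_in_range(user, sensors, 100.0)  # ground-1 and mav-1
engaged = sensors_in_range(user, sensors, 30.0)     # ground-1 only
```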

Based on CMOS sensor development and recent advances in video signal processing, such an integrated system could be produced and deployed within the next decade. Future advancements in high-speed wireless data transmission and continued miniaturization of video imaging, processing, and display technology will result in affordable, reliable, lightweight man-portable systems. Integrating sensor data into a real-time, ever-present, on-demand, high-resolution database will significantly enhance situational awareness on battlefields, resulting in improved tactics and outcomes in the battle environment.

Acknowledgements

This work has been sponsored by the U.S. Defense Advanced Research Projects Agency (DARPA) sensor development program.

Christopher Durso is director of business development at Xybion Electronic Systems Corp., San Diego, CA.