Medical imaging and artificial intelligence: naturally compatible

Machines should do the tedious, monotonous, and time-consuming tasks, so that humans can take healthcare to the next level.

"As an experimental psychologist, I have always been interested in trying to discover why images are viewed and interpreted differently by different people," says Elizabeth Krupinski, professor and vice chair for research in the Department of Radiology and Imaging Sciences at Emory University in Atlanta, Georgia. Her work is designed to better understand the perceptual and cognitive mechanisms underlying the interpretation of medical images. The goal: to improve patient care and outcomes through better training, reduction in errors, and optimization of the reading environment.

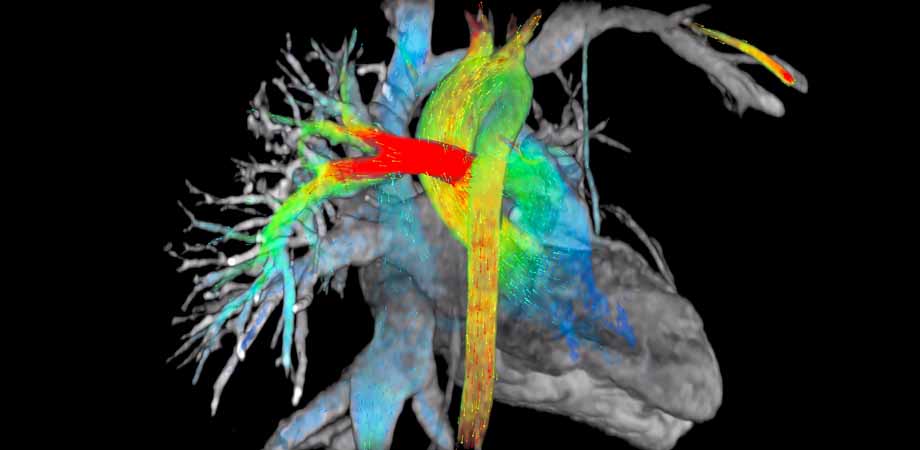

Krupinski notes that interpretation in medical imaging is particularly important, since differences in interpretation can lead to errors and directly affect patient care. Throughout her career in radiology, she has conducted numerous studies assessing how the presentation of image data to the radiologist, and any addition to or change in the format or content of those data, can affect diagnostic efficacy and efficiency. This work has covered everything from the display medium itself (film, monitor, or smart device) to how images are processed and how "decision-support" outputs are incorporated into the interpretation process. Decision-support tools originally took the form of computer-aided detection and/or diagnosis (CAD) prompts, and these have steadily evolved into artificial intelligence (AI) applications in radiology, says Krupinski.

On 4 February, Krupinski addressed the SPIE Fellow Member Luncheon with a talk entitled "Medical Imaging: The Need for Human-Artificial Intelligence Synergy." She pointed out how AI is changing the field of medicine, particularly clinical imaging. Rather than eliminating the role of radiologists, pathologists, and other image-based specialists, AI will help them increase efficacy and efficiency, Krupinski explained. However, she warned that optimizing the integration of AI into medical imaging will demand careful consideration of the human user.

With colleagues at Emory, Krupinski is currently working on collaborative research involving imaging sciences, biomedical informatics, and other medical specialties. Projects include not only more traditional uses of AI, such as detecting lesions and segmenting and measuring images, but also efforts to use AI to go beyond detection and provide more diagnostic information by combining imaging with related data from the electronic medical record, to address workflow issues, to analyze report quality, and to pursue many other potential applications. Other image-based specialties, such as pathology, dermatology, and ophthalmology, are also looking to radiology for guidance on how to incorporate AI pipelines into their research and clinical workflows.

The potential of pigeons

In a 2016 SPIE Proceedings article, "The potential of pigeons as surrogate observers in medical image perception studies," Krupinski writes about using pigeon models "as a surrogate for the human observer." Part of her conclusion is: "These early results suggest a novel method or tool that has the potential to help us better understand the mechanisms underlying medical image perception."

Despite the intriguing title, the goal of the study wasn't to use pigeons to diagnose images clinically, but rather to understand how visual learning takes place, and which types of visually learned tasks generalize well to some applications but not to others. Experiments with pigeons could also help narrow down which specific types of image manipulation (e.g., compression) are more or less likely to affect human detection and discrimination performance, so that human observer resources could be better spent on the most clinically relevant tasks.

Krupinski explains that the insight this study offers to those developing or using AI in medical imaging is twofold: "On the one hand, it speaks to the fact that each image interpreter (human, computer, or animal) ‘sees’ the image data in a different way, and thus performs differently at a given task," she says. "Understanding these differences can lead to insights into how we can improve any of these systems, including AI and humans.

"On the other hand, it reinforces the idea that the goal should not be to replicate exactly what the human eye-brain system does, since we are limited in what we see by our own physiology. Pigeons see features in their environment that humans don't see, and vice versa. The same applies to AI."

Elizabeth Krupinski demonstrates the eye-tracking device used in her fatigue studies.

One aspect of the human user that has influenced Krupinski's work in AI for medical imaging is fatigue. Over the past few years, with colleagues at Emory, the University of Arizona, and the University of Iowa, she has investigated the role of fatigue and its impact on radiologists and radiology residents.

A typical workday for a radiologist can be 10 to 12 hours long, and much of that time is often spent poring over images at a workstation. Tiredness and difficulty focusing can affect diagnostic accuracy. Krupinski and her co-workers have found evidence that fatigue alters visual search patterns, potentially reducing search efficiency and extending the time it takes to interpret individual cases. "All of this evidence points towards one inescapable conclusion: radiologists, especially residents, are often experiencing significant fatigue, reducing their ability to focus, and hence their diagnostic accuracy," she says.

Krupinski also argues that CAD and other analysis tools can assist radiologists by optimizing images and detecting features that the radiologist may be less sensitive to, or may be unable to perceive in the way a computer can. She adds that such tools need to be properly integrated into the clinical reading workflow rather than becoming an impediment to efficiency and accuracy.

Bright future, with a caveat

Krupinski notes that although the future of technologies like AI, AR/VR, and robotics in medicine is bright, the physician is still the ultimate decision-maker, and will remain so for the foreseeable future. "AI will be making some decisions and enabling radiologists' decision-making as well," she predicts. "One of the reasons for variability in radiologists' interpretation of images is the huge variability in the images themselves, both in terms of ‘normal’ features and lesions."

In her opinion, this variability is also why AI will never be 100% accurate. The medical imaging community should be proactive in deciding which tasks are most appropriate for AI to carry out, and why, and which are best suited to the human radiologist, assisted by additional information or intelligence, whether human or artificial.

Asked whether she thinks the use of AI will worsen the problem of doctors becoming isolated from patients, she answers, "Not at all."

"I think AI opens paths for better and more informative communication between physicians and patients," says Krupinski. "If AI can relieve clinicians of redundant, time-consuming tasks (such as measuring size changes in lesions over time with treatment) that are readily and often more accurately and consistently done by computers, clinicians will have more time to dedicate to the actual decision-making process and to interact with patients."

Last year, in a live Point/Counterpoint debate entitled "Artificial Intelligence will soon change the landscape of medical physics research and practice," available in the AAPM Virtual Library, Krupinski argued the opposing side of the for-or-against AI discussion. She noted that while AI will certainly revolutionize healthcare and, by extension, medical physics, it is imperative to understand that it will not (or perhaps should not) do what many fear it will: take over the roles and responsibilities of the doctor, radiologist, or other medical professionals.

A key role for many medical professionals is the education and training of junior healthcare colleagues. Krupinski points out that while AI can certainly be used to develop and provide a variety of training tools, it cannot sit down with a trainee, listen to their problems, explain subtle concepts and the "art" of medical physics, or provide the mentorship, guidance, and support required to foster their success as independent professionals.

"Deep learning and AI are still a long way from being creative, and this has been the case from the very beginning of AI implementations," said Krupinski in the debate. "As [professor of cognitive science at the University of Sussex, UK] Margaret Boden pointed out in 1998, the two major bottlenecks to AI creativity are domain expertise and evaluation of results (i.e. critical judgment of one's own original ideas)."

Krupinski notes that a significant portion of a medical professional's job, whether solving a complicated clinical problem, developing a new line of research, or communicating and collaborating with colleagues and patients, involves creativity and ingenuity. So far, AI has not been able to master these activities or display true creativity.

"Let the computers take over the tedious, monotonous, and time-consuming tasks," suggests Krupinski. "Humans will have more time to create, discover, and lead healthcare to the next level."

This article originally appeared in the 2019 Photonics West Show Daily.
