Making iris recognition more reliable and spoof resistant

Iris recognition appears to have all the attributes we would like in a biometric: it is fast, highly reliable and, by its nature, non-invasive. Commercial implementations of iris recognition are currently growing rapidly, due in part to the fact that its concept patent1 recently expired in the US (2005) and in Europe and Asia (2006). Several recent field tests have shown how outstanding iris recognition can be in terms of accuracy and speed, but it is still rare for these systems to be able to tell whether or not the eye being scanned is alive.

Here, we present two independent iris coding methods: one based on the Zak-Gabor transformation, and the other on compact zero crossing. The first technique selects transformation coefficients automatically to make the system adaptive to images of varying quality, even those not compliant with the ISO/IEC 19794-6 standard,2 such as iris images taken with mobile phones. The second method is a compact version of the original zero-crossing algorithm, as proposed by Boles,3 and improves on the original in terms of accuracy, speed, and template size. We also examine the spoof resistance of selected commercial iris-recognition devices and the potential of proposed anti-spoofing methods.

Iris imaging and preprocessing

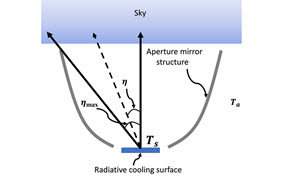

Before any image processing can take place, the iris images must be captured. We designed and constructed a system that manages the iris image capture process from a convenient distance and with minimal human cooperation. It is noteworthy that in commercial iris imaging devices, the imaging hardware is typically inseparably connected to the particular authentication methodology to be used. Our hardware checks in real time that the eye is present at the appropriate distance and position. It then automatically starts to acquire a sequence of frames with varying focal lengths, thus compensating for its small depth of field. The iris image capture, including the eye positioning, takes no more than five seconds. The images taken are compliant with the rectilinear iris image requirements recommended by the ISO2 (see Figure 1).

We use local image gradient estimation to outline both the inner (i.e., between the pupil and the iris) and outer (i.e., between the iris and the sclera) iris boundaries that can be distinguished within the raw camera image. To localize occlusions, we propose a routine that does not assume any particular occlusion shape and allows disruptions to be localized through the detection of local irregularities in the iris body. Two image quality measures are calculated: a focus factor and the occlusion coverage level. The rectilinear iris images that pass the quality check are transformed into polar coordinates to form a family of discrete functions (iris stripes) representing the angular fluctuations of the iris structure at various iris radial zones.
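
The polar transformation can be illustrated with a minimal Python sketch. It assumes concentric circular pupil and iris boundaries, nearest-neighbor sampling, and one stripe per radial zone taken at the zone's mid-radius. The function name `unwrap_to_stripes`, its parameters, and the synthetic test image are illustrative assumptions rather than details of the system described here.

```python
import math

def unwrap_to_stripes(image, cx, cy, r_pupil, r_iris, n_zones=4, n_angles=64):
    """Sample a grayscale image (list of rows) along circles lying between
    the pupil and iris boundaries. Returns n_zones stripes, each holding
    n_angles samples taken around the full circle."""
    height, width = len(image), len(image[0])
    stripes = []
    for z in range(n_zones):
        # Mid-radius of the z-th radial zone (hypothetical zone layout).
        r = r_pupil + (z + 0.5) * (r_iris - r_pupil) / n_zones
        stripe = []
        for k in range(n_angles):
            theta = 2.0 * math.pi * k / n_angles
            # Nearest-neighbor sampling, clamped to the image borders.
            x = min(max(int(round(cx + r * math.cos(theta))), 0), width - 1)
            y = min(max(int(round(cy + r * math.sin(theta))), 0), height - 1)
            stripe.append(image[y][x])
        stripes.append(stripe)
    return stripes

# Synthetic image whose intensity equals the distance from the center,
# so each stripe should be roughly constant and equal to its radius.
size = 41
image = [[math.hypot(x - 20, y - 20) for x in range(size)] for y in range(size)]
stripes = unwrap_to_stripes(image, cx=20, cy=20, r_pupil=4, r_iris=16)
```

Real boundaries are rarely concentric, so a practical version would interpolate between the two detected boundary curves instead of using a single center.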

Zak-Gabor-based coding

Iris coding is intended to designate a set of local iris features to form a compact image representation. We use the Zak transform to convert iris stripes into Gabor-transformation coefficients.4 The use of these coefficients rather than Gabor filtering,5 which is typically used in commercial systems, has many advantages: e.g., we can embed a ‘replay attack’ prevention mechanism into the coding, stopping the electronic replay of an authentication procedure, independent of any cryptographic measures taken. This is due to a limited connection between the code and the image space.

The proposed coding adapts to different optical setups by analyzing the Fisher information related to the transformation coefficients. The coding is additionally personalized by making it dependent on the most informative elements of each analyzed iris. The overall recognition procedure also includes eyeball-rotation compensation.
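
Eyeball rotation appears as a cyclic shift along the angular axis of the iris stripes, so one common way to compensate for it is to compare the probe against the template over a small range of cyclic shifts and keep the best match. The sketch below illustrates this idea only; it assumes a binary code sampled along the angular direction and a normalized Hamming distance, and the names `hamming_distance` and `best_rotation` are hypothetical and do not describe the compensation procedure actually used.

```python
def hamming_distance(a, b):
    """Fraction of positions at which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

def best_rotation(code, template, max_shift):
    """Try cyclic shifts of `code` in [-max_shift, max_shift] and return
    (shift, distance) for the shift that best matches `template`.
    A shift s maps code to code[s:] + code[:s]."""
    best_shift, best_dist = 0, hamming_distance(code, template)
    for s in range(-max_shift, max_shift + 1):
        rotated = code[s:] + code[:s]
        d = hamming_distance(rotated, template)
        if d < best_dist:
            best_shift, best_dist = s, d
    return best_shift, best_dist

# Toy example: the probe is the template rotated by three angular samples.
template = [1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1]
probe = template[3:] + template[:3]
shift, distance = best_rotation(probe, template, max_shift=4)
```

The shift range trades tolerance to head tilt against the number of comparisons per match.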

Zero-crossing-based coding

We apply scaled Laplacian-of-Gaussian filters to the iris stripes to obtain their zero-crossing representation. We employ two representations of zero crossings: the rectangular one, as proposed earlier by Boles;3 and the sign-only one, a compact one-bit quantization of the rectangular representation.6
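
A minimal Python sketch of the sign-only idea follows. It assumes a sampled one-dimensional Laplacian-of-Gaussian kernel, circular filtering (the stripes are periodic in angle), and a single scale `sigma`. The helper names and the kernel truncation at four standard deviations are illustrative choices, not parameters of the method described above.

```python
import math

def log_kernel(sigma):
    """Sampled 1-D Laplacian of Gaussian (second derivative of a Gaussian),
    truncated at 4*sigma and shifted to have exactly zero mean."""
    half = int(math.ceil(4 * sigma))
    kernel = []
    for t in range(-half, half + 1):
        g = math.exp(-t * t / (2.0 * sigma ** 2))
        kernel.append((t * t / sigma ** 4 - 1.0 / sigma ** 2) * g)
    mean = sum(kernel) / len(kernel)
    return [k - mean for k in kernel]

def filter_stripe(stripe, sigma):
    """Circularly filter a stripe with the kernel at scale sigma."""
    kernel = log_kernel(sigma)
    half = len(kernel) // 2
    n = len(stripe)
    return [
        sum(kernel[j] * stripe[(i + j - half) % n] for j in range(len(kernel)))
        for i in range(n)
    ]

def zero_crossings(filtered):
    """Indices i where the sign changes between sample i and i + 1 (cyclic)."""
    n = len(filtered)
    return [i for i in range(n)
            if (filtered[i] >= 0) != (filtered[(i + 1) % n] >= 0)]

def sign_code(filtered):
    """One-bit quantization: 1 where the filtered stripe is non-negative."""
    return [1 if v >= 0 else 0 for v in filtered]

# Toy stripe: four periods of a sinusoid over 64 angular samples.
stripe = [math.sin(2 * math.pi * 4 * (i + 0.5) / 64) for i in range(64)]
filtered = filter_stripe(stripe, sigma=2.0)
code = sign_code(filtered)
```

Repeating the filtering at several values of `sigma` would give one code per scale; the sign-only code stores one bit per sample instead of the amplitude levels kept by the rectangular representation.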

Each filtered stripe (f-stripe) can be treated as a point in a vector space, with a distance defined by the correlation coefficient. For each f-stripe, we select the enrollment image that minimizes the maximal distance between its f-stripe and the corresponding f-stripes of the remaining enrollment images, that is, the enrollment image of smallest variability. This is repeated for all f-stripes, and weights inversely proportional to these variabilities are assigned.
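
The selection rule can be sketched in Python as follows. The sketch assumes that the distance is one minus the Pearson correlation coefficient and that the weights are normalized to sum to one; both are assumptions made for illustration, as are the function names and the toy data.

```python
import math

def correlation_distance(a, b):
    """One minus the Pearson correlation coefficient of two sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return 1.0 - cov / math.sqrt(va * vb)

def select_templates(enrollment):
    """enrollment[i][s] is f-stripe s of enrollment image i.
    For each stripe index, pick the image whose f-stripe has the smallest
    maximal distance to the same stripe in the other images, and weight the
    stripe inversely to that distance (its variability)."""
    n_images, n_stripes = len(enrollment), len(enrollment[0])
    chosen, variability = [], []
    for s in range(n_stripes):
        worst = [
            max(correlation_distance(enrollment[i][s], enrollment[j][s])
                for j in range(n_images) if j != i)
            for i in range(n_images)
        ]
        best = min(range(n_images), key=worst.__getitem__)
        chosen.append(best)
        variability.append(worst[best])
    inverse = [1.0 / (v + 1e-12) for v in variability]  # guard against v == 0
    total = sum(inverse)
    return chosen, [w / total for w in inverse]

# Toy data: three enrollment images, two f-stripes each.
enrollment = [
    [[1, 2, 3, 4], [1, 2, 3, 4]],
    [[1, 2, 3, 5], [4, 1, 3, 2]],
    [[1, 2, 3, 8], [2, 4, 1, 3]],
]
chosen, weights = select_templates(enrollment)
```

In this toy data the first stripe is stable across images and the second is not, so the first stripe receives almost all of the weight.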

Recognition results

To evaluate our methodologies we use our database of 720 iris images captured for 180 eyes. Out of four images of each eye, three were used to create the templates and the remaining one was used for evaluation. We achieved an equal error rate (EER, the operating point at which the false rejection and false acceptance rates are equal) of 0.56% for the rectangular zero-crossing coding. The sign-only variant reached an EER of 0.03%, a recognition accuracy that is better by more than an order of magnitude. Finally, the personalized Zak-Gabor approach seems to do much better than either zero-crossing method, as we did not encounter any sample errors at all for the collected data set (EER was 0.0%, see Figure 2). However, we used the same eyes (though not the same images) to estimate and evaluate the proposed codings, so these results should be interpreted with care. It is noteworthy, on the other hand, that a commercial system (Panasonic ET100, purchased in 2003), tested with the same database, had a 39.13% failure-to-enroll rate and falsely rejected 15.38% of those successfully enrolled.
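
For readers unfamiliar with the EER, the following Python sketch shows one simple way to estimate it from two lists of comparison distances. The scores below are made up for illustration and are not taken from our database; the threshold sweep over observed scores is also only one of several possible estimation procedures.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FAR, FRR) when a comparison is accepted if its distance
    is less than or equal to the threshold."""
    frr = sum(d > threshold for d in genuine) / len(genuine)
    far = sum(d <= threshold for d in impostor) / len(impostor)
    return far, frr

def equal_error_rate(genuine, impostor):
    """Sweep all observed distances as thresholds and return (EER, threshold)
    at the point where FAR and FRR are closest to each other."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, t)
    return best[1], best[2]

# Hypothetical distances: same-eye comparisons should be small,
# different-eye comparisons should be large.
genuine = [0.10, 0.20, 0.30, 0.40]
impostor = [0.35, 0.50, 0.60, 0.70]
eer, threshold = equal_error_rate(genuine, impostor)
```

When the genuine and impostor distributions do not overlap, as we observed for the Zak-Gabor coding on our data, this estimate is zero.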

Aliveness detection

Detecting whether or not eyes are alive became a serious and disturbing issue after the 2002 publication of the results of an experiment performed (much earlier) at the Fraunhofer Research Institute (Darmstadt, Germany) in collaboration with the German Federal Institute for Information Technology Security (BSI).7 This dealt with well-established face-, fingerprint-, and iris-recognition systems. Combined with subsequent8,9 experiments, these results showed that there was an alarming lack of anti-spoofing mechanisms in devices that were supposed to be protecting sensitive areas all over the world.

We propose three methods of eye-aliveness detection10 based on frequency analysis (FA), controlled light reflection (CLR), and pupil dynamics (PD). The FA approach, suggested earlier by Daugman,5 detects artificial frequencies in iris images that may exist due to the finite resolution of the printing devices. The CLR method relies on the detection of infrared light reflections on the moist cornea when stimulated with light sources positioned randomly in space. Finally, the PD approach employs a model of the human pupil response to light changes. To differentiate between alive and fake irises, we thus have to verify the hypothesis that the observed object reacts as a human pupil does. Each observation of the eye in time gives model parameters that are checked against those obtained for a real pupil.
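
The intuition behind the FA approach can be sketched in Python on a one-dimensional signal. The sketch assumes that a printing raster adds a periodic component at a frequency above those of the natural iris texture, and it scores a signal by the share of its spectral energy above a cut-off. The cut-off `band_start`, the function names, and the synthetic signals are hypothetical and chosen only for illustration; they do not describe the detector evaluated here.

```python
import cmath
import math

def dft_magnitudes(signal):
    """Magnitudes of the DFT bins 1 .. n/2 of the mean-removed signal."""
    n = len(signal)
    mean = sum(signal) / n
    return [
        abs(sum((x - mean) * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(signal)))
        for k in range(1, n // 2 + 1)
    ]

def raster_score(signal, band_start):
    """Fraction of the non-DC spectral energy at frequencies >= band_start."""
    energy = [m * m for m in dft_magnitudes(signal)]
    total = sum(energy)
    if total == 0.0:
        return 0.0
    return sum(energy[band_start - 1:]) / total

# Synthetic example: smooth "live" texture versus the same texture with an
# added high-frequency periodic pattern imitating a printing raster.
n = 64
live = [math.sin(2 * math.pi * 2 * i / n) + 0.5 * math.sin(2 * math.pi * 5 * i / n)
        for i in range(n)]
printed = [v + 0.8 * math.sin(2 * math.pi * 24 * i / n) for i, v in enumerate(live)]
live_score = raster_score(live, band_start=16)
printed_score = raster_score(printed, band_start=16)
```

A decision would then compare the score with a threshold tuned on real data; a two-dimensional spectrum of the iris image would be the natural extension.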

In the development stage, we used a body of various fake (printed) eye images, produced with different printers and on three printout carriers: glossy paper, matt paper, and transparent foil (see Figure 3). Approximately 600 printouts were made for 30 different eyes. We found that fake eyes printed in color on paper carriers are sufficient to deceive commercial systems. Thus, in the testing phase, this kind of color printout was prepared for 12 different eyes.

All the tested methodologies, namely the FA, the CLR, and the PD, showed a 0% fake-iris acceptance rate. The CLR and the PD also showed a 0% live-iris rejection rate (the FA's was 2.75%). This compares very favorably to commercial equipment: Camera A accepts 73% and Camera B accepts more than 15% of the fake irises presented (see Table 1). A procedure that combines all the techniques investigated might be the most effective and useful against more sophisticated forms of eye forgery.

Conclusion

We have briefly described some novel approaches to iris coding and to detecting whether or not an eye presented comes from a live subject. Coding approaches that rely on Zak-Gabor or zero-crossing principles have been found to be very effective in computation-constrained environments. The Zak-Gabor-based iris templates were successfully used in biometric smart card development (with on-card iris matching) and in remote biometric-based access implementation, partially as a result of European cooperation within the BioSec integrated project.11

Dr Adam Czajka received his MSc in computer control systems in 2000 and got his PhD in control and robotics in 2005 from Warsaw University of Technology, where he has been since 2003. He has worked with the Research and Academic Computer Network on biometrics since 2002. He is a member of the NASK Research Council, the IEEE (Institute of Electrical and Electronics Engineers), and serves as the Secretary of the IEEE Poland Section.

Mr Przemek Strzelczyk received his MSc in Information Technology in 2004 from Warsaw University of Technology and has worked for the Biometric Laboratories at NASK since then. He started his PhD at Warsaw University of Technology in 2005, and his current interests include iris biometrics, authentication protocols, and smart cards.

Mr Andrzej Pacut received his MSc in control and computer engineering in 1969, his PhD in electronics in 1975, and his DSc in control and robotics in 2000. He has been with Warsaw University of Technology since 1969 and is now a professor in the Institute of Control and Computation Engineering. He joined NASK in 1999, where he is head of the Biometric Laboratories. He is a senior member of the IEEE, a member of the International Neural Network Society, and serves as the President of the IEEE Poland Section.